Durga Puja in Odisha

Friday, September 18, 2020

Thursday, September 17, 2020

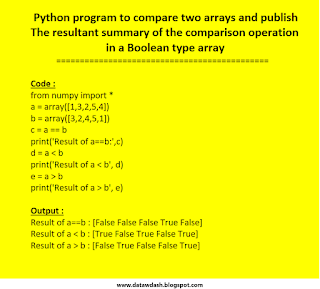

python program to compare two arrays and publish the resultant summary of the comparison operation in a boolean type array
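The body of this post is not reproduced here, so the following is only a minimal sketch of what the title describes, assuming NumPy arrays of equal length. The sample values are made up for illustration.

```python
import numpy as np

# Two sample arrays of equal length (values chosen only for illustration).
a = np.array([1, 2, 3, 4, 5])
b = np.array([1, 0, 3, 7, 5])

# Comparison operators work element by element and return a boolean array.
equal = a == b
greater = a > b

print('a == b:', equal)
print('a > b :', greater)

# np.array_equal reports whether the two arrays match as a whole.
print('Identical arrays:', np.array_equal(a, b))
```

Each position of the boolean array summarises the comparison for the matching pair of elements.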


Monday, September 14, 2020

Technology challenges and solutions of big data - Variety


===============================================

Description

To efficiently store large and small data objects and data formats

Solution

Columnar databases that store data as key-value pairs

Technology Used

HBase, Cassandra
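The key-value idea behind such stores can be sketched with nested dictionaries. This is only an illustrative in-memory model, not the HBase or Cassandra API; the `put` and `get` helpers, the row keys and the sample values are all hypothetical.

```python
# Hypothetical in-memory stand-in for a wide-column table:
# row key -> column family -> {column name: value}
table = {}

def put(row_key, family, column, value):
    """Store one value under a row key, column family and column name."""
    table.setdefault(row_key, {}).setdefault(family, {})[column] = value

def get(row_key, family, column, default=None):
    """Read one value back; columns that were never written return the default."""
    return table.get(row_key, {}).get(family, {}).get(column, default)

# A small object: a couple of short text fields.
put('user:1', 'profile', 'name', 'Asha')
put('user:1', 'profile', 'city', 'Cuttack')

# A larger object in a different format, stored in the same table.
put('user:2', 'media', 'avatar', bytes(1024))

print(get('user:1', 'profile', 'name'))
print(len(get('user:2', 'media', 'avatar')))
print(get('user:2', 'profile', 'name'))
```

Because every row holds only the key-value pairs that were actually written, rows with different shapes and sizes can sit side by side, which is what makes this layout suitable for varied data.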

what are some technology challenges for big data


- Storing Huge Volumes of Big Data

The first and foremost technology challenge of big data is storing huge quantities of data. No single machine has storage large enough to hold the ever-growing volume of data, and this is a major problem for most organisations that work with big data. The practical approach is therefore to store the data on manageable, inexpensive machines first, and to invest in better infrastructure later as budgets allow. Machines, however, can fail at any time, and the more machines there are in the big data ecosystem, the higher the chance that one of them fails at any given moment. Every machine in the cluster may fail at some point, and the failure of a machine can mean the loss of the valuable data stored on it.

- The first function of big data technology is therefore to store huge volumes of data without incurring a high cost to the organisation, while also guarding against the risk of data loss. Big data systems do this by distributing data across a large cluster of inexpensive machines connected over a network. Every piece of data is stored on multiple machines, which ensures that at least one copy remains available even when a machine fails. Hadoop, a well-known big data clustering technology, provides this kind of fail-safe storage. Its storage layer is called the Hadoop Distributed File System (HDFS). HDFS is modelled on the Google File System (GFS), which was designed to store billions of pages and sort them to answer user search queries.
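The replication idea can be sketched in a few lines of Python. This is a toy model, not HDFS itself: the machine names, the replication factor of 3 and the round-robin placement are assumptions made for illustration, and real HDFS placement is more involved.

```python
REPLICATION = 3  # assumed number of copies kept for each block

# Five inexpensive machines, each modelled as a dict of block id -> data.
machines = {name: {} for name in ('m1', 'm2', 'm3', 'm4', 'm5')}

def store(block_id, data):
    """Write a block to REPLICATION machines, chosen round-robin by block id."""
    names = sorted(machines)
    start = block_id % len(names)
    for i in range(REPLICATION):
        machines[names[(start + i) % len(names)]][block_id] = data

def read(block_id, failed=()):
    """Return the block from the first healthy machine that holds a copy."""
    for name in sorted(machines):
        if name not in failed and block_id in machines[name]:
            return machines[name][block_id]
    raise IOError('all replicas lost')

for block_id, data in enumerate(['page-a', 'page-b', 'page-c', 'page-d']):
    store(block_id, data)

print(read(0))                         # all machines healthy
print(read(0, failed={'m1', 'm2'}))    # two of the three replicas are down
```

With three copies per block, a read still succeeds after any two machines fail; data is lost only when every machine holding a copy is down at once.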

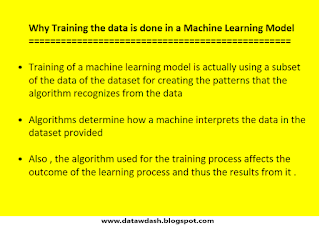

Training in Machine Learning


========================

- Training provides a learner algorithm with examples of the desired inputs and the results expected from those inputs. The learner algorithm uses these examples to create a function.

- Training, in the machine learning context, is the process in which a learner algorithm fits a function to the data so that the inputs are matched to their expected outputs.
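As a minimal sketch of the two points above, the snippet below trains on a handful of input/output examples and produces a function. The straight-line model, the least-squares fit and the sample values are assumptions chosen to keep the example small; real learners use many other models.

```python
# Training examples: inputs paired with the results expected from them.
# The values are made up for illustration and follow y = 2x + 1 exactly.
inputs = [1.0, 2.0, 3.0, 4.0]
expected = [3.0, 5.0, 7.0, 9.0]

def train(xs, ys):
    """Fit y = w*x + b by ordinary least squares and return (w, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    w = covariance / variance
    b = mean_y - w * mean_x
    return w, b

w, b = train(inputs, expected)

def predict(x):
    """The learned function that maps an input to an output."""
    return w * x + b

print('w =', w, 'b =', b)
print('prediction for 5.0:', predict(5.0))
```

Here `train` plays the role of the learner algorithm and `predict` is the function it creates.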

Back-propagation in machine learning


===============================

- Back-propagation, or backward propagation of errors, is a method for reducing the errors of a neural network, a structure built to resemble the way human neurons function. It continually changes the weights and biases of the network, with the goal of arriving at an actual output that matches the target output.

- The neurons in the network receive the transmitted information and relay it to the next neuron in line. The entire network is built to relay information from the source to the desired target. Each neuron handles a portion of the total information relayed, and the neurons keep passing information to the next neuron in line until the final set of neurons creates the output. The total error at the result (the gap between the actual output and the target) is then calculated and passed backward through the network by back-propagation.
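The two points above can be illustrated with a tiny network of one hidden neuron and one output neuron. This is a minimal sketch under stated assumptions: the sigmoid activation, the squared-error loss, the learning rate and the starting weights are all illustrative choices, not something taken from the post.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# One training example and arbitrary starting weights (illustrative values).
x, target = 1.0, 0.5
w1, b1, w2, b2 = 0.5, 0.0, -0.3, 0.1
learning_rate = 0.5

losses = []
for step in range(200):
    # Forward pass: relay the input through the hidden neuron to the output.
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    error = y - target
    losses.append(0.5 * error ** 2)

    # Backward pass: push the output error back through each layer.
    grad_w2, grad_b2 = error * h, error
    grad_z = error * w2 * h * (1.0 - h)   # chain rule through the sigmoid
    grad_w1, grad_b1 = grad_z * x, grad_z

    # Adjust weights and biases against their gradients.
    w1 -= learning_rate * grad_w1
    b1 -= learning_rate * grad_b1
    w2 -= learning_rate * grad_w2
    b2 -= learning_rate * grad_b2

print('first loss:', losses[0])
print('last loss :', losses[-1])
```

Each pass through the loop changes the weights and biases a little, so the actual output moves closer to the target and the loss shrinks.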

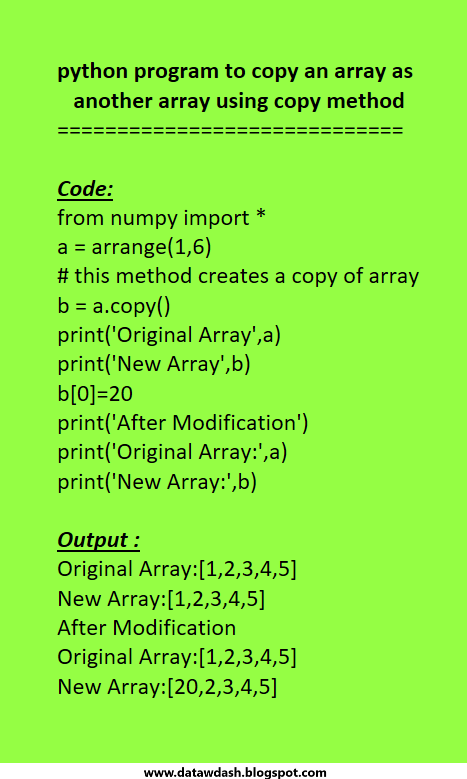

python program to copy an array as another array using copy method


=============================

Code:

import numpy as np

# arange(1, 6) creates the array [1 2 3 4 5]
a = np.arange(1, 6)

# copy() creates an independent copy of the array
b = a.copy()

print('Original Array:', a)
print('New Array:', b)

# changing the copy does not affect the original
b[0] = 20

print('After Modification')
print('Original Array:', a)
print('New Array:', b)

Output :

Original Array: [1 2 3 4 5]
New Array: [1 2 3 4 5]
After Modification
Original Array: [1 2 3 4 5]
New Array: [20  2  3  4  5]
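To see why the copy method matters, it may help to compare it with plain assignment and with a view. The following is a small sketch using NumPy; the variable names are chosen only for illustration.

```python
import numpy as np

a = np.arange(1, 6)

alias = a          # plain assignment: both names refer to the same array
view = a.view()    # new array object that shares the same underlying data
copied = a.copy()  # independent copy with its own data

# Modify the original and see which of the three follow the change.
a[0] = 99

print('alias :', alias)
print('view  :', view)
print('copied:', copied)
```

Only the array made with `copy()` keeps its original first element; the alias and the view both reflect the change made through `a`.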

Technology Challenges for Big Data


Question - Technology Challenges for Big Data

--------------------------------------------------------------

Ingesting streams at an extremely fast pace. This challenge relates to the velocity at which big data streams into enterprise systems, that is, to torrential and fast streams of data. In some cases the data may arrive too fast and in too large a volume to be stored straight away, but the pace of the inflow should still be monitored. This can be done by creating special-purpose ingesting systems that open multiple channels for receiving and consuming the data. These ingesting systems hold the data in queues, from which business applications can read and process it at their own pace and convenience.
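The queue-based ingestion described above can be sketched with Python's standard library. This is a toy model only: the number of channels, the number of events and the use of threads to stand in for input channels are assumptions made for illustration.

```python
import queue
import threading

events = queue.Queue(maxsize=100)  # holding queue between sources and applications
NUM_CHANNELS = 3                   # assumed number of parallel input channels
EVENTS_PER_CHANNEL = 5

def channel(channel_id):
    """One ingest channel: receive events and place them on the queue."""
    for n in range(EVENTS_PER_CHANNEL):
        events.put((channel_id, n))

producers = [threading.Thread(target=channel, args=(i,)) for i in range(NUM_CHANNELS)]
for t in producers:
    t.start()
for t in producers:
    t.join()

# The business application drains the queue at its own pace.
processed = []
while not events.empty():
    processed.append(events.get())

print(len(processed), 'events processed')
```

The queue decouples the two sides: the channels can write as fast as data arrives, while the reading application decides when and how quickly to consume it.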

The second layer of a big data system, and its second most important purpose, is thus to manage the challenge posed by the velocity of big data. To deal with this issue, special stream-processing engines have been put in place: all incoming data is fed into a central queueing system on the network of big data machines. From this queue, a fork-shaped system sends the data both to batch storage and to stream processing. The stream-processing engines collect the high-velocity data and can pass it on to batch-processing systems, which divide the incoming data into multiple batches and distribute the volume among themselves. A popular system for this type of work is Apache Spark, which handles streaming applications.

Sources of Data over a Big Data Ecosystem


===================================

01) Human - Human communications

For example, social media such as Facebook, Instagram and Twitter, along with streaming videos and comments, generate a lot of one-to-one and one-to-many data, which can be stored and analysed for sentiments and patterns.

02) Human - Machine communications

For example, the web and smart devices through which many people access the resources they need. On an e-commerce site, a user submits requests for products on a page and also browses other products displayed under a particular attribute, feature or commodity.

03) Machine - Machine communications

Communication between Internet of Things devices, such as mobile-to-modem communication, communication between mobiles and other handheld devices connected over the internet, health-tracking and fitness bands, seismic activity recorders, weather monitoring and tracking sensors, etc.

04) Business transactions

These may involve any of the above means and modes of circulating information.

variety of data - big data


Variety of Data

- Big Data includes data of all forms, for all kinds of functions, and from all sources and devices. If traditional data forms such as invoices and ledgers are like a small store, then Big Data is like the biggest imaginable shopping mall, one that offers unlimited variety.

- The three major kinds of variety of data are as follows :

01) FORM OF DATA :

Data types range from numbers to text, graphs, maps, audio, video and many other forms, with some of the data types being simple and others very complex. There can also be composites of data that include many elements in a single file. For example, text documents have graphs and pictures embedded in them, and video movies have audio songs embedded in them. Audio and video have different and far more complex storage formats than numbers and text. Numbers and text can be analysed more easily than an audio or video file.

02) FUNCTIONS OF DATA :

A lot of data is generated from human conversations, songs and movies, business transaction records, machine operations performance data, new product design data, old archived data, etc. Human communication data needs to be processed very differently from operational performance data, with different expectations and objectives. Because of this, Big Data technologies can be used to recognise people's faces in pictures, compare voices to identify the speaker, and compare handwriting to identify the writer.

03) SOURCE OF DATA :

Mobile phones and tablet devices enable a wide range of applications (or apps) to access and generate data anytime and anywhere. Web access, usage and search logs are another new and huge source of data. Businesses and enterprise systems generate massive amounts of structured business transactional information. Temperature and pressure sensors on machines, and Radio Frequency Identification (RFID) tags on assets, generate incessant and repetitive data.

Overall, it can be summarised that there are three forms of data and data sources:

(i) human-to-human communications

(ii) human-to-machine communications

(iii) machine-to-machine communications

Defining and Understanding Big Data


Defining Big Data

===============

* Big Data, in the quantitative sense, implies a huge amount of data, handled by a large database engine, with high complexity and depth.

* The amount of data considered big data is so large that humans cannot reasonably visualise it without employing machine learning models for recognition. Machine learning enables the understanding and use of big data.

* A big data source is a repository from which one can get data and start working on it to solve general problems that involve multiple variables.

* Big Data involves acquiring large datasets, which is a daunting and challenging job. Storing and transferring the data for effective processing is another big and complicated challenge.

* Another big concern is anonymity, since Big Data raises many concerns about privacy and data protection. However, if big data is used for machine learning problems, privacy and personal data processing might be less of an issue, because the goal of machine learning is to build models for pattern identification and prediction.

How does Machine Learning enable Artificial Intelligence to perform tasks


=========================================

1) Machine Learning helps detect patterns in all sorts of data sources

2) Machine Learning helps create new models based on the recognised patterns and behaviours

3) Machine Learning helps make decisions based on the success and failure of those patterns and behaviours

Data Handling technique of Machine Learning and Statistics


=================================================

* Machine Learning works with Big Data in the form of networks and graphs, and with raw data from sensors and the internet. The collected data is split into training and test data.

* In Statistics, models are created on smaller samples to make predictions, and further analysis of future data is based on past data.
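The split into training and test data mentioned in the first point can be sketched as follows. The `train_test_split` helper, the 25% test fraction and the stand-in readings are hypothetical, written here with the standard library only.

```python
import random

def train_test_split(records, test_fraction=0.25, seed=0):
    """Shuffle a copy of the records and split it into (train, test)."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

readings = list(range(20))  # stand-in for raw sensor readings
train, test = train_test_split(readings)

print(len(train), 'training records')
print(len(test), 'test records')
```

The model is fitted on the training records only, and the held-back test records are then used to check how well it handles data it has not seen.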

What is the distinguishing property of arrays

====================================

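In Python, arrays (for example, those created with the array module) are a dynamic data structure: they can increase or decrease their size in memory at runtime, so one need not specify the size of the array at the time of its creation. This differs from the classic arrays of languages such as C or Java, whose size is fixed when they are created.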
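A short sketch with Python's built-in array module shows an array growing and shrinking at runtime. The type code 'i' (signed integers) and the sample values are illustrative choices.

```python
from array import array

# Create an empty typed array of signed integers; no size is given.
numbers = array('i')

# The array grows as elements are added.
for value in (10, 20, 30):
    numbers.append(value)
numbers.extend([40, 50])
print(numbers.tolist(), len(numbers))

# The array shrinks as elements are removed.
numbers.remove(20)
last = numbers.pop()
print(numbers.tolist(), len(numbers))
```

The length changes from 5 to 3 without the array ever being declared with a fixed size.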

What are the types of arrays in data-structure


======================================

There are two types of arrays :

1) Single-dimensional arrays or 1D arrays

2) Multi-dimensional arrays, such as 2D and 3D arrays
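The two types can be illustrated with NumPy, which is assumed here because the other programs in this blog use it. The sample values are arbitrary.

```python
import numpy as np

# 1D array: a single row of elements, indexed with one position.
one_d = np.array([1, 2, 3, 4])

# 2D array: rows and columns, indexed with two positions.
two_d = np.array([[1, 2, 3],
                  [4, 5, 6]])

print(one_d.ndim, one_d.shape)
print(two_d.ndim, two_d.shape)

# Row 1, column 2 of the 2D array (indices start at 0).
print(two_d[1, 2])
```

The `ndim` attribute gives the number of dimensions and `shape` gives the size along each one.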
